Your daughter is watching videos about butterflies. Fifteen minutes later, you glance at the screen and see something involving a cartoon character and… is that blood? You didn’t touch her tablet. She didn’t search for anything new. The algorithm did exactly what it was designed to do.

I’ve watched this play out with my own kids—across different ages, different devices, different starting points. And my librarian brain couldn’t let it go without understanding how, exactly, we got here.

Key Takeaways

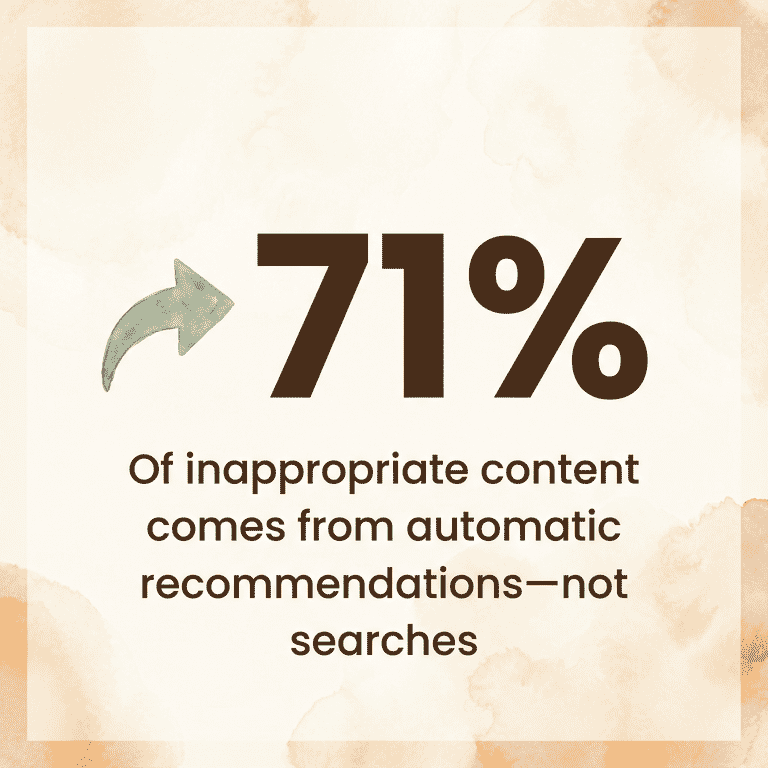

- 71% of the inappropriate content users reported in a Mozilla Foundation investigation came from YouTube’s automatic recommendations, not searches

- How often kids access YouTube matters more than total screen time: many short sessions are linked to more behavioral problems than fewer long ones

- YouTube Kids still shows concerning content because creators self-categorize their videos and the system can’t verify honesty

- Three simple settings changes can dramatically reduce your child’s exposure to algorithm-driven inappropriate content

The Promise vs. The Reality

Let me be honest: I understand why parents rely on YouTube Kids. When you’re making dinner and your two-year-old is melting down, a device that instantly delivers content they love feels like a lifeline. YouTube works. The kids are quiet. Everyone survives until bedtime.

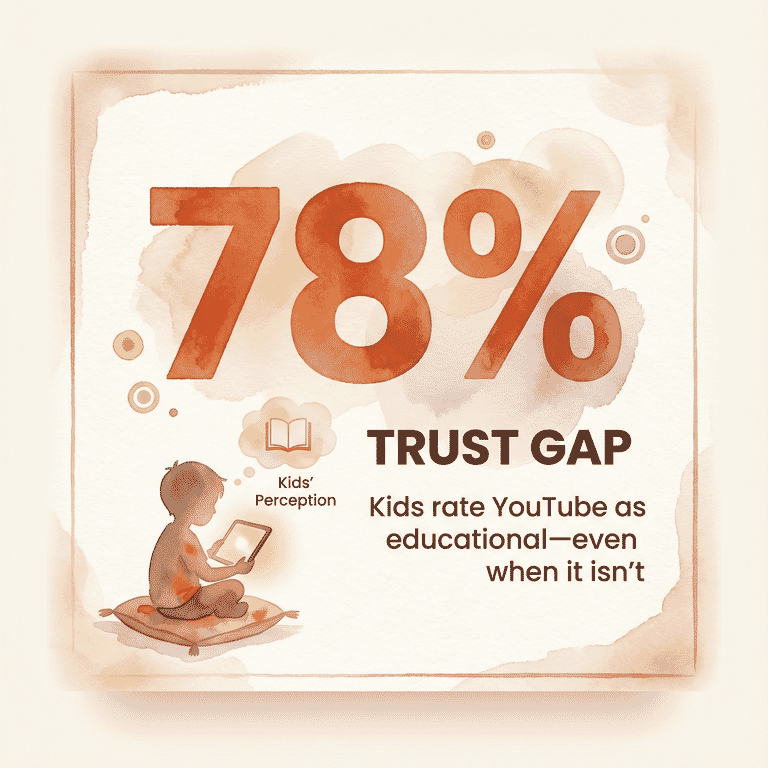

Plus, our children genuinely believe they’re learning something. Research from Ohio State University (2020) found that children rate YouTube content as more educational than television or smartphone videos—regardless of whether the content actually is educational. Kids are primed to trust the platform before they even hit play.

Here’s where the gap opens up: what YouTube promises parents and what the algorithm actually delivers are two different things. The platform was designed to maximize engagement, not age-appropriateness.

And “engaging” content for a developing brain often means content that’s shocking, dramatic, or emotionally provocative.

This disconnect matters more as we explore how screens became the default gift for children. When tablets become a go-to present, YouTube becomes the default activity—and the algorithm becomes a constant presence in your child’s cognitive development.

Inside the Recommendation Engine

Here’s what the research actually shows about how YouTube’s recommendation system works with children.

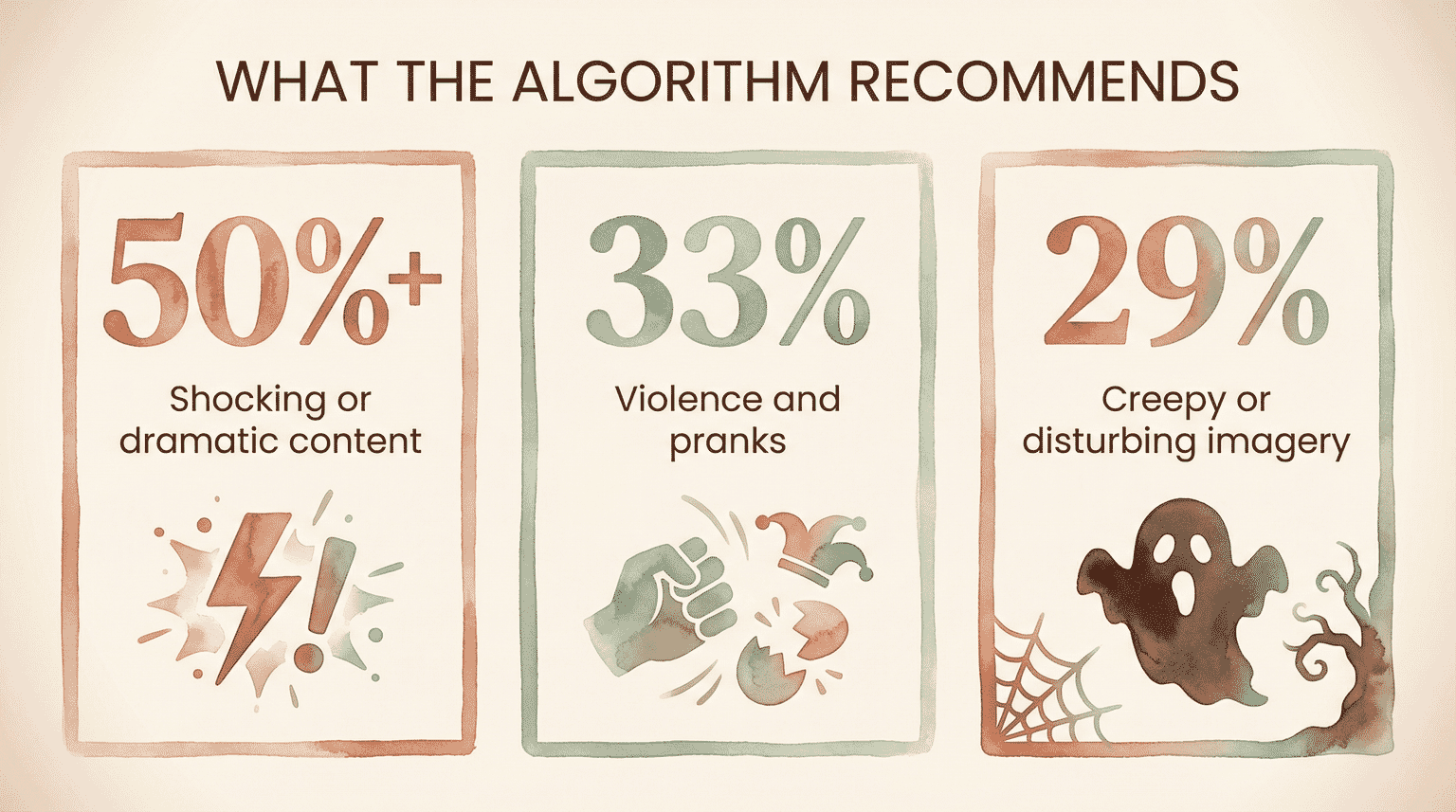

A 2024 study from University of Michigan Health analyzed nearly 3,000 video thumbnails that were recommended to children after they searched for common topics like Minecraft, Fortnite, and memes. The findings were stark:

- More than 50% contained “shocking, dramatic, or outrageous” messaging

- Approximately 33% included violence, peril, and pranks

- 29% featured “creepy, bizarre, and disturbing” imagery

This wasn’t content children searched for—it was content the algorithm pushed toward them.

A Mozilla Foundation investigation found that 71% of inappropriate content reported by users came from YouTube’s automatic recommendation system, not from direct searches. The algorithm, not your child, is choosing what appears next.

Platform safety researchers have documented what they call the “13-click problem”: starting from legitimate BBC children’s content, it took only thirteen clicks on recommended videos to reach disturbing content featuring children’s characters committing suicide.

Each click felt reasonable in the moment. The destination was not.

Understanding the psychology behind why unboxing videos captivate children helps explain what kinds of content the algorithm favors—anything that triggers emotional responses and keeps small fingers tapping.

What Actually Gets Through

YouTube Kids was created in 2015 to solve these problems. It garners millions of weekly views in households where parents reasonably assume “Kids” means “safe.”

It doesn’t.

Research from the Information Sciences Institute (2022) documented that YouTube Kids—the app specifically designed for children—has shown videos promoting drug culture and firearms to toddlers. The Tech Transparency Project found content discussing cocaine and crystal meth, instructions for concealing weapons, encouragement of skin bleaching, and introduction of diet culture to young children.

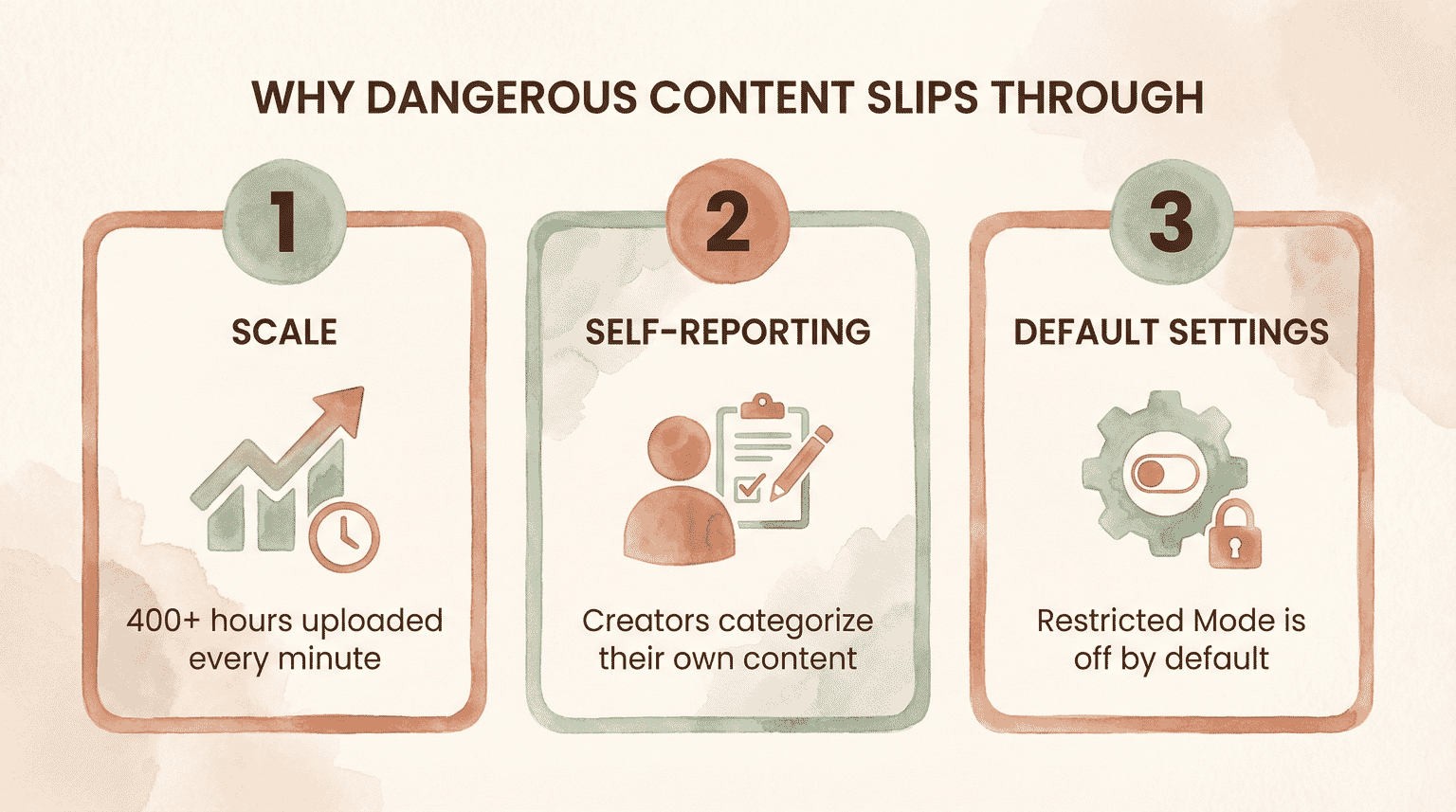

Why does this keep happening?

Scale is the first problem. With 400+ hours of video uploaded every minute, human review of everything is mathematically impossible.

Self-categorization is the second problem. Publishers decide whether their content is “appropriate for children.” They can lie. The system has no meaningful verification.

Default settings are the third problem. Restricted Mode is off by default. Parents who don’t know to enable it—and many don’t—are relying on a filter that isn’t active.
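To put the scale problem in numbers, here is a rough back-of-the-envelope sketch. It uses the 400 hours per minute figure quoted above; the eight-hour reviewing shift at normal playback speed is my own assumption for illustration, not something from the research.

```python
# Back-of-the-envelope arithmetic for the scale problem.
UPLOAD_HOURS_PER_MINUTE = 400  # upload figure quoted in this article
SHIFT_HOURS_PER_DAY = 8        # assumption: one reviewer watches 8 hours a day at normal speed


def reviewers_needed(upload_hours_per_minute: float, shift_hours: float) -> float:
    """Reviewers required to watch every uploaded hour exactly once."""
    hours_uploaded_per_day = upload_hours_per_minute * 60 * 24
    return hours_uploaded_per_day / shift_hours


hours_per_day = UPLOAD_HOURS_PER_MINUTE * 60 * 24
print(f"Video uploaded per day: {hours_per_day:,} hours")
print(f"Full-time reviewers needed: {reviewers_needed(UPLOAD_HOURS_PER_MINUTE, SHIFT_HOURS_PER_DAY):,.0f}")
```

Under those assumptions, that is 576,000 hours of new video a day and roughly 72,000 people doing nothing but watching, every single day. Real moderation would look different, but the order of magnitude shows why platforms lean on automated filtering.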

The implications of these failures extend beyond individual families. When platforms can’t protect children at scale, every parent is left playing defense.

“Platforms with billions of hours of content can’t perform human review of everything suggested to children and use algorithms that are imperfect. Parents and children need to be aware of the risks…”

— Dr. Jenny Radesky, Developmental Behavioral Pediatrician, University of Michigan Health C.S. Mott Children’s Hospital

The Brain Science of the Trap

If YouTube’s algorithm is problematic, why can’t children simply stop watching? Why does my 10-year-old act like the tablet is being surgically removed when I take it away?

The answer lies in developmental neuroscience.

According to the American Psychological Association, between ages 10 and 12, receptors for dopamine and oxytocin multiply in the ventral striatum, the brain’s reward center. This makes preteens extra sensitive to attention, validation, and the variable rewards that algorithmic content provides.

This biological reality means the algorithm isn’t just competing for your child’s attention—it’s exploiting a developmental window when their brain is literally wired to seek exactly what the platform provides.

The variable reward pattern of “what comes next?” mirrors slot machine mechanics. Each swipe or autoplay trigger activates that sensitized reward system.

“For the first time in human history, we have given up autonomous control over our social relationships and interactions, and we now allow machine learning and artificial intelligence to make decisions for us.”

— Mitch Prinstein, Chief Science Officer, American Psychological Association

Short-form video content presents additional risks. Research published in the Journal of Family Medicine and Primary Care (2025) identifies YouTube short video addiction as a specific subtype of internet addiction. These concise, fast-paced, visually captivating formats “foster prolonged engagement” through mechanisms that exploit developing reward systems.

When an engagement designer described YouTube as “one of the most sophisticated behavioral conditioning systems ever deployed on children,” comparing each click to a slot machine pull, I felt my stomach drop. I’ve watched my own kids in that trance state. Now I understand the mechanism creating it.

The Frequency Paradox

Here’s something that surprised me in the research—and it reframes how we should think about limits.

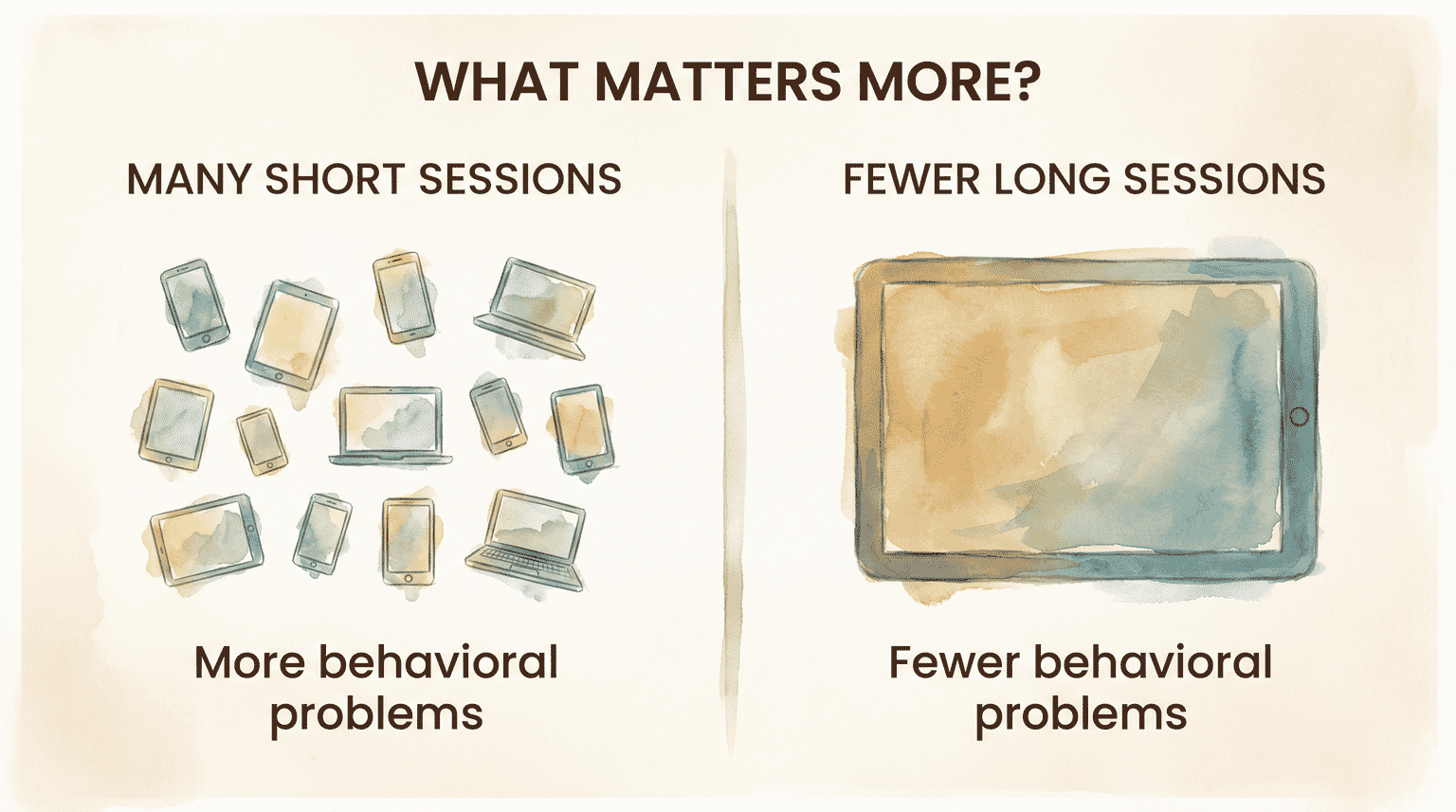

A 2024 longitudinal study from Korea’s K-CURE cohort tracked 195 children and found that usage frequency matters more than total duration. Children who accessed YouTube many times per day showed more behavioral problems than those who watched longer but less often.

Twenty-one percent of children in the study started using YouTube before age four. And younger age at first use was significantly associated with increased emotional and behavioral problems later.

The practical question shifts from “How many total minutes?” to “How many times is your child reaching for the screen?”

Many short sessions—the drip-drip-drip of quick videos throughout the day—may be more problematic than fewer, longer viewing periods. This challenges the standard screen time conversation most parents are having.
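If you want to see the difference between frequency and duration in your own house, a simple tally is enough. The sketch below is only an illustration: the `Session` log format and both example days are made up, and it does not reproduce how the study measured usage.

```python
from dataclasses import dataclass


@dataclass
class Session:
    start: str    # "HH:MM", hypothetical log format
    minutes: int  # length of the viewing session


def summarize(sessions: list[Session]) -> dict[str, int]:
    """Count how many times the screen was picked up and the total minutes watched."""
    return {
        "sessions": len(sessions),
        "total_minutes": sum(s.minutes for s in sessions),
    }


# Two made-up days with the same total screen time.
few_long = [Session("16:00", 30), Session("19:00", 30)]
many_short = [
    Session(start, 5)
    for start in (
        "07:30", "08:15", "09:40", "11:00", "12:20", "13:45",
        "15:10", "16:05", "17:30", "18:15", "19:00", "19:45",
    )
]

print("Few long sessions:  ", summarize(few_long))
print("Many short sessions:", summarize(many_short))
```

Both days add up to 60 minutes, so a screen time report would treat them as identical. The session count (2 versus 12) is the number the research suggests watching.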

The Advertising Layer

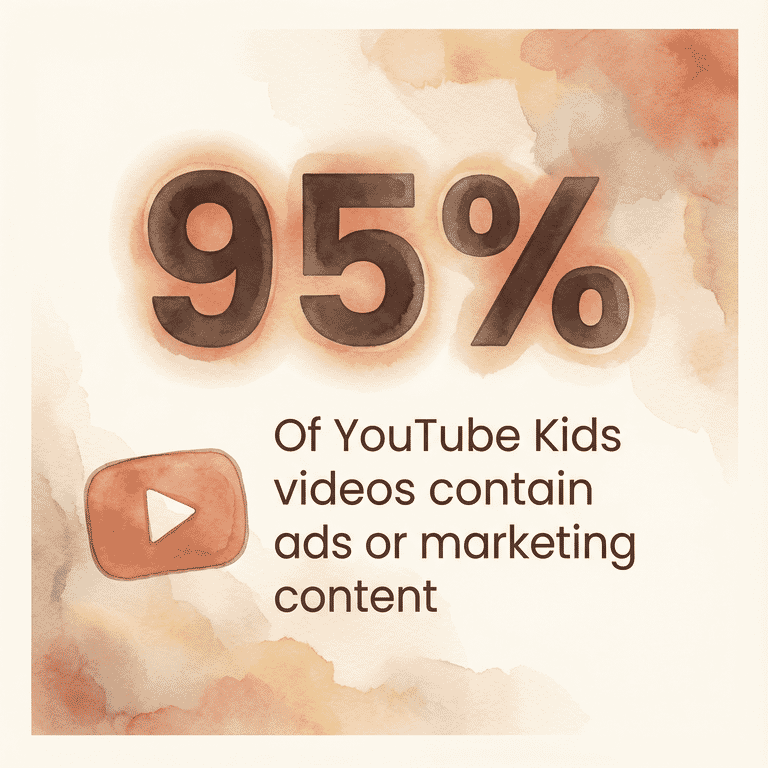

One more layer parents don’t always see: despite expectations that a “Kids” app would be ad-free, advertising penetrates YouTube Kids extensively. Common Sense Media research found that 95% of YouTube Kids videos contain advertising or marketing content.

Children cannot distinguish between content and advertising until around age 8, and even then, the distinction is difficult when ads are integrated into videos by trusted creators.

The parasocial relationships children form with YouTubers—emotional connections where they feel the creator genuinely knows and likes them—make these children more receptive to purchase messages, and less able to recognize them as marketing.

What Actually Works

After diving into this research, I want to be clear: I’m not saying YouTube must be eliminated from every household. In my house of eight kids, that’s not realistic. But understanding the mechanism changes how I approach it.

Alternative Platforms with Curated Content

- PBS Kids (free)—human-curated, no algorithm-driven recommendations

- Noggin (subscription)—educational focus without engagement-maximizing design

- Sesame Workshop apps—age-appropriate by design

The tradeoff: less variety, but dramatically reduced exposure to inappropriate content and addictive mechanics.

Settings That Matter Most

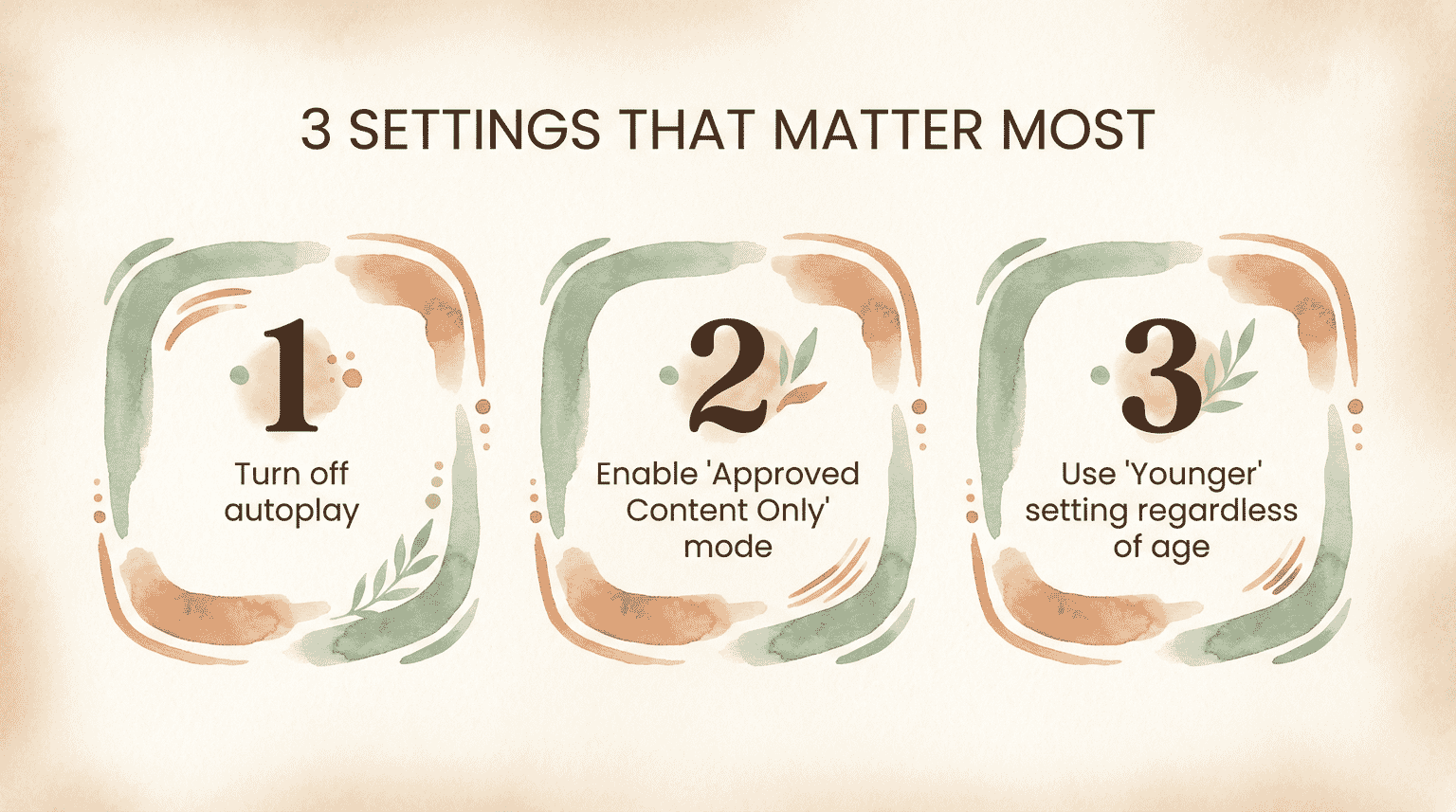

If you continue using YouTube Kids, these three changes make the biggest difference:

- Turn off autoplay—stops the recommendation engine from controlling what’s next

- Enable “Approved Content Only” mode—you pre-select every available video

- Use the “Younger” content setting regardless of your child’s actual age—applies more restrictive filtering

The Co-Viewing Approach

When parents watch with children and talk about what they’re seeing, the passive consumption dynamic shifts entirely. Co-viewing is also the viewing context that correlates most strongly with positive language development outcomes in screen time research.

Navigating the pressure parents feel to document childhood online connects to this challenge—we’re all figuring out how digital platforms fit into family life.

The Decision Framework

I’m not going to tell you whether YouTube Kids is right for your family. But I can share the questions that help me think it through:

How old is your child? Under-4 exposure shows the strongest associations with later problems. The association is consistent enough that delaying first use looks like a sensible precaution.

What are you seeing behaviorally? Increased irritability after viewing, difficulty transitioning away from screens, requesting YouTube specifically when stressed—these patterns warrant attention.

What’s realistic for your family? Total elimination isn’t possible for everyone. Reducing frequency of access, enabling stricter settings, and shifting toward co-viewing may be more achievable.

What alternatives exist? Curated platforms require more effort to set up but fundamentally change the risk profile.

Mitch Prinstein’s warning sits with me:

“It’s even scarier to consider how this may be changing brain development for an entire generation of youth.”

— Mitch Prinstein, Chief Science Officer, American Psychological Association

I don’t want to be scared. I want to be informed—and I want the same for other parents. The algorithm isn’t going to change on its own. But understanding how it works gives us something we didn’t have before: the ability to make real decisions rather than just hoping for the best.

Frequently Asked Questions

Why does YouTube Kids show inappropriate videos?

YouTube Kids relies on content creators to self-categorize their videos as child-appropriate, and they can lie. With more than 400 hours of video uploaded every minute, human review of everything is impossible. A Mozilla Foundation investigation found that 71% of the inappropriate content users reported came from automatic recommendations, not direct searches.

Is YouTube Kids safe for 5-year-olds?

Research raises significant concerns. A 2024 study found that 21% of children started using YouTube before age 4, and younger first use was significantly associated with later emotional and behavioral problems. Children this age cannot recognize inappropriate material independently.

How much YouTube is too much for kids?

Focus on frequency, not just duration. Korean researchers found many short sessions may be worse than fewer longer viewing periods. Children accessing YouTube many times daily showed more behavioral problems than those watching longer but less often.

What is better than YouTube Kids?

Apps with human-curated content include PBS Kids (free), Noggin (subscription), and Sesame Workshop apps. These don’t use engagement-maximizing algorithms or allow user-uploaded content—the tradeoff is less variety with significantly reduced risk.

How do I make YouTube Kids safer?

Turn off autoplay, enable “Approved Content Only” mode, and use the “Younger” content setting regardless of your child’s actual age. Even with these settings, supervised co-viewing remains the most effective protection.

What About You?

Has YouTube Kids served up something disturbing at your house? I’d love to hear what alternative apps have worked—and whether you’ve found anything that keeps kids entertained without the algorithm rabbit hole.

Your algorithm horror stories help other parents navigate this digital minefield.

References

- University of Michigan Health Study – Video thumbnail analysis research

- K-CURE Study (BMC Public Health) – Longitudinal research on YouTube usage patterns

- Information Sciences Institute Research – Inappropriate content classification study

- American Psychological Association – Social media and developing brains

- Ohio State University – Children’s perceptions of YouTube’s educational value

- Journal of Family Medicine and Primary Care – Short video addiction research
